IvI grant

Researching the interface between Deep Neural Networks and Dynamical Systems

Artificial intelligence, mainly through the success of deep neural networks, has repeatedly broken records in core machine learning and computer vision tasks such as object recognition and action classification, while the depth of trainable networks has kept increasing. Despite this success, the inherent complexity of deep neural networks makes it hard to understand in depth how their complex capabilities arise from the simple dynamics of individual artificial neurons. As a consequence, deep networks are often associated with a lack of explainability of their predictions, with instability, and even with a lack of transparency when it comes to improving their building blocks.

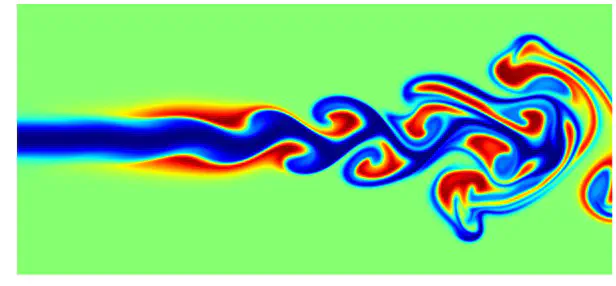

In this project, deep neural networks (DNNs) will be studied in the context of complex adaptive systems analysis. The goal is to gain insight into the structural and functional properties of the computational graph that results from the learning process. Techniques to be employed include dynamical systems theory and iterative maps (chaotic attractors, Lyapunov exponents), information theory (Shannon entropy, mutual information, and multivariate measures such as synergistic information), and network theory. Overarching questions include: How do these (multilayer) networks self-organize to solve a particular task? How is information represented in these systems? Is there a set of fundamental properties underlying the structure and dynamics of deep neural networks?
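Some of the techniques named above can be made concrete with small toy computations. The following Python sketch is an illustration under stated assumptions, not the project's method. It estimates the Lyapunov exponent of the logistic map (a textbook iterative map, chosen here purely as an example) and computes plug-in Shannon entropy and mutual information for discrete sequences, such as the binarized activations of hypothetical network units. All function names are hypothetical. The XOR example hints at synergistic information (information present only in the joint state of several units), but plug-in mutual information is not itself a multivariate synergy measure such as a partial information decomposition.

```python
import math
from collections import Counter


def lyapunov_logistic(r, x0=0.4, n_transient=1_000, n_iter=100_000):
    """Estimate the Lyapunov exponent of the logistic map x -> r*x*(1 - x).

    The exponent is the orbit average of log|f'(x_t)|, with f'(x) = r*(1 - 2x).
    A positive value indicates sensitive dependence on initial conditions (chaos).
    """
    x = x0
    for _ in range(n_transient):  # discard the transient so x lies on the attractor
        x = r * x * (1 - x)
    total = 0.0
    for _ in range(n_iter):
        total += math.log(abs(r * (1 - 2 * x)))
        x = r * x * (1 - x)
    return total / n_iter


def entropy(symbols):
    """Plug-in Shannon entropy, in bits, of a sequence of hashable symbols."""
    n = len(symbols)
    return -sum((c / n) * math.log2(c / n) for c in Counter(symbols).values())


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) = H(X) + H(Y) - H(X,Y), in bits."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


if __name__ == "__main__":
    # Stable fixed point x* = 0.6: exponent is log|f'(0.6)| = log 0.5, about -0.693.
    print(lyapunov_logistic(2.5))
    # Fully chaotic regime: theory gives an exponent of ln 2 for r = 4.
    print(lyapunov_logistic(4.0))

    # Two binary "units" and their XOR: each input alone carries 0 bits about
    # the output, but the pair carries 1 bit (information only in the joint).
    x1 = [0, 0, 1, 1]
    x2 = [0, 1, 0, 1]
    y = [a ^ b for a, b in zip(x1, x2)]
    print(mutual_information(x1, y), mutual_information(x2, y))  # 0.0 0.0
    print(mutual_information(list(zip(x1, x2)), y))              # 1.0
```

Applying these measures to real network activations would first require discretizing continuous values (for example by binning), and plug-in estimates are biased for small samples; both are choices this sketch does not address.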